Neuroscience researchers are exploring how to measure neural activity in people who have limited or no ability to speak. In neurological disease, damage to the nervous system can paralyze the muscles required for speech, as seen in patients with amyotrophic lateral sclerosis (ALS, also known as Charcot’s disease). In individuals who have had a stroke, brain regions involved in language may be affected. In many of these cases, although patients cannot articulate words, they may retain enough function to imagine words and sentences. It is this “imagined speech” that researchers want to understand better.

Researchers from the Department of Basic Neuroscience at the University of Geneva (UNIGE) Faculty of Medicine, including scientist Timothee Proix, professor Anne-Lise Giraud, and assistant professor Pierre Megevand, have found that although previous studies have been able to decode spoken language, imagined speech is harder to decode because its neural signals are weaker and more variable than those of spoken speech.

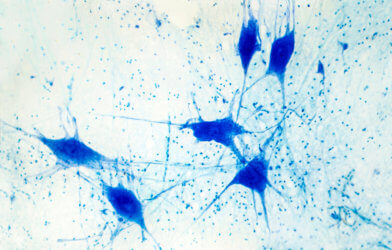

When a person speaks, sounds are emitted that can be measured, and elements of them can be traced back to specific regions of the brain. With imagined speech, by contrast, words and sentences are produced internally, which makes them difficult to measure. Fewer areas of the brain are engaged, making it challenging for researchers to compare the resulting patterns with those of spoken speech.

The UNIGE team conducted a study with thirteen hospitalized patients who were being treated for epilepsy. In collaboration with two American hospitals, the researchers analyzed data recorded through electrodes that had been implanted directly into the patients' brains. “We asked these people to say words and then to imagine them. Each time, we reviewed several frequency bands of brain activity known to be involved in language,” said Anne-Lise Giraud, a professor in the Department of Basic Neuroscience at the UNIGE Faculty of Medicine and newly appointed director of the Institut de l’Audition in Paris, in a statement.

Several frequency bands were observed when patients spoke, whether aloud or internally. Among them were theta waves (4-8 Hz), which are linked to the average rhythm of syllable articulation. Gamma frequencies (25-35 Hz) were observed in parts of the brain where speech sounds are formed. Beta waves (12-18 Hz) were measured in parts of the brain where cognition is tied to speech, for example when predicting how a conversation will evolve.

The highest frequencies (80-150 Hz) were measured when a person spoke out loud. Lower frequencies, such as beta and gamma, were found to carry essential information needed to decode imagined speech.
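To make the idea of frequency bands more concrete, here is a minimal Python sketch that measures how much of a signal's power falls into each of the bands quoted above. It is an illustration only and not the method used in the study: the signal is synthetic (a 6 Hz and a 15 Hz sine wave standing in for a recording), and the names `BANDS`, `band_powers`, and `synthetic_signal` are hypothetical.

```python
import cmath
import math

# Frequency bands in Hz, as quoted in the article.
BANDS = {
    "theta": (4.0, 8.0),
    "beta": (12.0, 18.0),
    "low_gamma": (25.0, 35.0),
    "high_frequency": (80.0, 150.0),
}


def band_powers(signal, sample_rate):
    """Sum the spectral power of `signal` inside each band in BANDS.

    Uses a plain discrete Fourier transform, evaluated only at the
    frequency bins that fall inside one of the bands.
    """
    n = len(signal)
    powers = {name: 0.0 for name in BANDS}
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n  # frequency of bin k in Hz
        matching = [name for name, (lo, hi) in BANDS.items() if lo <= freq <= hi]
        if not matching:
            continue
        coeff = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)
        )
        power = abs(coeff) ** 2 / n ** 2
        for name in matching:
            powers[name] += power
    return powers


def synthetic_signal(sample_rate=500, seconds=1.0):
    """Hypothetical test signal: a strong 6 Hz wave plus a weaker 15 Hz wave."""
    n = int(sample_rate * seconds)
    return [
        1.0 * math.sin(2 * math.pi * 6 * t / sample_rate)
        + 0.5 * math.sin(2 * math.pi * 15 * t / sample_rate)
        for t in range(n)
    ]


powers = band_powers(synthetic_signal(), 500)
strongest = max(powers, key=powers.get)
print(strongest, powers)
```

In this toy signal the theta band dominates because the 6 Hz component has the largest amplitude. Real recordings are far noisier, and decoding speech from them involves much more than comparing band powers.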

The temporal cortex was identified as an important region to observe when decoding imagined speech. Located on the left lateral side of the brain, it processes information related to hearing and memory. It also houses Wernicke's area, which contains neurons responsible for the comprehension of speech.

Measuring neural activity during imagined speech provided useful information on how the brain functions. In the future, researchers hope to decode imagined language with greater accuracy and to learn more about how neurodegenerative diseases affect spoken and internal speech.

Findings from this study were first published in the journal Nature Communications.

Article written by Elizabeth Bartell