Article written by Stephen Badham, Nottingham Trent University

We often assume young people are smarter, or at least quicker, than older people. For example, we’ve all heard that scientists, and even more so mathematicians, carry out their most important work when they’re comparatively young.

But my new research, published in Developmental Review, suggests that cognitive differences between the old and young are tapering off over time. This is hugely important, as stereotypes about the intelligence of people in their sixties or older may be holding them back in the workplace and beyond.

Cognitive aging is often measured by comparing young adults, aged 18-30, to older adults, aged 65 and over. Older adults perform worse than young adults on a variety of tasks, such as those testing memory, spatial ability and speed of processing, which often form the basis of IQ tests. That said, there are a few tasks that older people do better at than younger people, such as reading comprehension and vocabulary.

Declines in cognition are driven by a process called cognitive aging, which happens to everyone. Surprisingly, age-related cognitive deficits start very early in adulthood: declines in cognition have been measured in adults as young as 25.

Often, it is only when people reach older age that these effects add up to a noticeable amount. Common complaints include walking into a room and forgetting why you entered, difficulty remembering names and struggling to drive in the dark.

The trouble with comparison

Comparing young adults to older adults can sometimes be misleading, though. The two generations were brought up in different times, with different levels of education, healthcare and nutrition. They also lead different daily lives, with some older people having lived through a world war while the youngest generation is growing up with the internet.

Most of these factors favor the younger generation, and this can explain part of their advantage in cognitive tasks.

Indeed, much existing research shows that IQ improved globally throughout the 20th century. This means that later-born generations are more cognitively able than those born earlier. This holds even when both generations are tested in the same way at the same age.

There is now growing evidence that these increases in IQ are leveling off: over the most recent couple of decades, young adults have been no more cognitively able than young adults born shortly before them.

Together, these factors may underlie my new finding that cognitive differences between young and older adults are diminishing over time.

New results

My research began when my team started getting strange results in our lab. We often found that the age differences between young and older adults were smaller, or even absent, compared with prior research from the early 2000s.

This prompted me to start looking at trends in age differences across the psychological literature in this area. I uncovered a variety of data comparing young and older adults, from the 1960s up to the present day. When I plotted these data against year of publication, I found that age deficits have been getting smaller over the last six decades.

Next, I assessed whether the increases in average cognitive ability over time, seen across the population as a whole, also applied to older adults specifically. Many large databases exist in which new groups of individuals are recruited every few years to take part in the same tests. I analyzed studies using these datasets, focusing on older adults.

I found that, just like younger people, older adults were indeed becoming more cognitively able with each cohort. But if differences are disappearing, does that mean younger people’s improvements in cognitive ability have slowed down or that older people’s have increased?

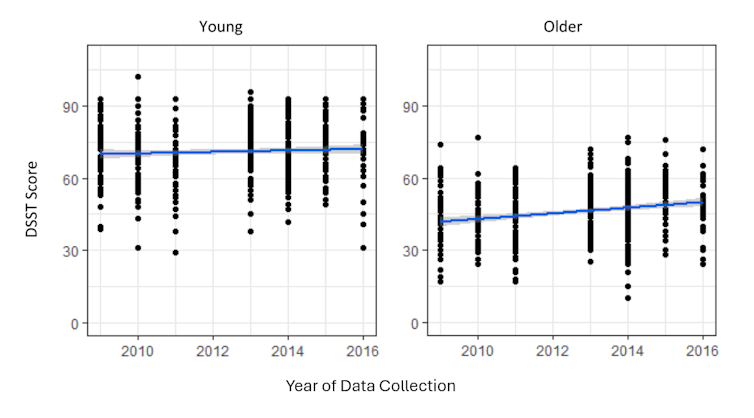

To find out, I analyzed data that I had gathered in my own laboratory over a seven-year period. Here, I was able to separate the performance of the young adults from that of the older adults. I found that successive cohorts of young adults performed at a similar level across this seven-year period, but that older adults showed improvements in both processing speed and vocabulary scores.

I believe the older adults of today are benefiting from many of the factors that previously applied mainly to young adults. For example, the number of children who went to school increased significantly in the 1960s, and the school system of that time was more similar to today’s than to that of the early 20th century.

This is reflected in that cohort’s higher scores today, now that they are older adults. At the same time, young adults have hit a ceiling and are no longer improving as much with each cohort.

It is not entirely clear why younger generations have stopped improving so much. Some research has explored possible explanations such as maternal age, mental health and even evolutionary trends. I favor the view that there is simply a natural ceiling: a limit to how much factors such as education, nutrition and health can improve cognitive performance.

These data have important implications for research into dementia. For example, a modern older adult in the early stages of dementia might pass a dementia test that was designed 20 or 30 years ago for the general population of that time.

Because older adults are performing better in general than previous generations did, it may be necessary to revise definitions of dementia that depend on an individual’s expected level of ability.

Ultimately, we need to rethink what it means to grow older. And there is, finally, some good news: we can expect to be more cognitively able than our grandparents were when we reach their age.

Stephen Badham is a Professor of Psychology at Nottingham Trent University

This article is republished from The Conversation under a Creative Commons license. Read the original article.