Artificial intelligence could be your next master sommelier. Scientists at the National Institute of Standards and Technology (NIST) and their collaborators have developed a wine-tasting neural network that uses less energy and operates more quickly than typical AI programs.

Artificial intelligence consumes far more energy than the human brain to perform tasks. The brain consumes an estimated average of 20 watts of power while making calculations, whereas AI systems can use thousands of times that. AI hardware also lags, making it slower, less efficient and less effective than the human brain. That’s why researchers are developing less energy-intensive alternatives.

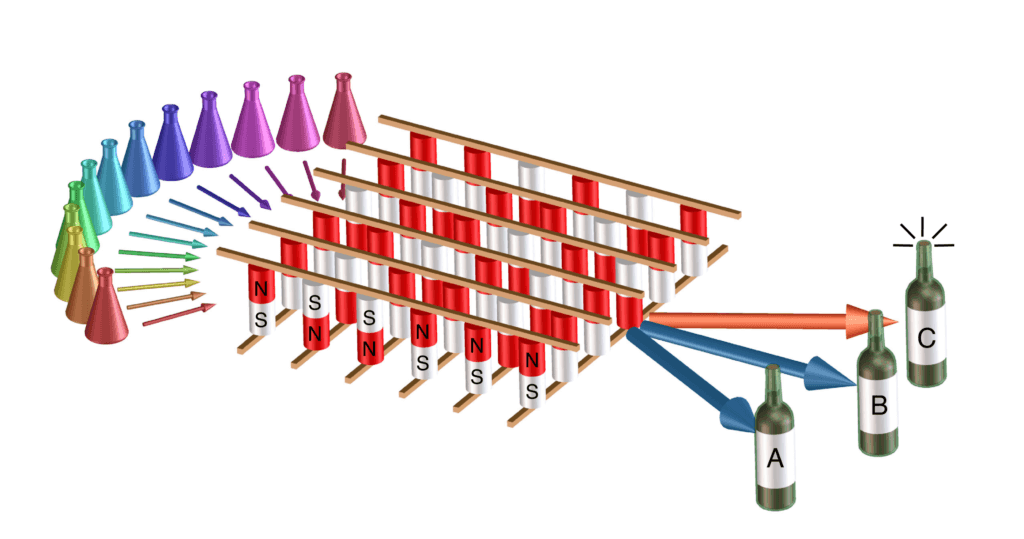

The magnetic tunnel junction, or MTJ, is one alternative device that is showing promise. An MTJ needs only a few sips of energy and is good at the kinds of math a neural network uses. MTJs use several times less energy than their traditional AI hardware counterparts and can also operate more quickly because they store data in the same place they do their computation.

To find out whether an array of MTJs could work as a neural network, the scientists staged a virtual wine tasting. They trained the AI system’s virtual palate using 148 wines from a dataset of 178 made from three types of grapes. Each virtual wine had 13 characteristics, including alcohol level, color, flavonoids, ash, alkalinity and magnesium. Each characteristic was assigned a value between 0 and 1 for the network to consider when distinguishing one wine from the others.
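For readers curious what that preparation might look like in practice, here is a minimal sketch. It assumes min-max scaling and a random split, neither of which the article confirms as the researchers' actual method. The function names `min_max_scale` and `split_train_test` are hypothetical, and the numbers are random placeholders, not real wine measurements.

```python
import random


def min_max_scale(rows):
    """Rescale every column of `rows` so its values fall between 0 and 1."""
    columns = list(zip(*rows))
    lows = [min(col) for col in columns]
    highs = [max(col) for col in columns]
    return [
        [
            # A constant column has no spread, so map it to 0.0.
            (value - lo) / (hi - lo) if hi > lo else 0.0
            for value, lo, hi in zip(row, lows, highs)
        ]
        for row in rows
    ]


def split_train_test(rows, n_train, seed=0):
    """Shuffle a copy of `rows`, then return (training rows, held-out rows)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder data: 178 "wines" with 13 characteristics each (random values).
rng = random.Random(42)
wines = [[rng.uniform(0.0, 100.0) for _ in range(13)] for _ in range(178)]

scaled = min_max_scale(wines)
train, test = split_train_test(scaled, n_train=148)
print(len(train), len(test))  # 148 30
```

Scaling every characteristic to the same range keeps one measurement, such as magnesium, from drowning out another simply because it is recorded in larger numbers.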

“It’s a virtual wine tasting, but the tasting is done by analytical equipment that is more efficient but less fun than tasting it yourself,” says NIST physicist Brian Hoskins in a statement.

The AI system was then given a virtual wine-tasting test on the full dataset, which included 30 wines it hadn’t seen before, and passed with a 95.3% success rate. It made only two mistakes on the 30 wines it hadn’t trained on.
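The two figures measure different things: the 95.3% covers all 178 wines, while the two mistakes apply only to the 30 unseen ones. A quick sketch of the arithmetic for the unseen wines, using only the counts quoted above (the helper name `accuracy_percent` is hypothetical):

```python
def accuracy_percent(correct, total):
    """Return the share of correct answers as a percentage."""
    return 100.0 * correct / total


unseen_total = 30    # wines held back from training
unseen_mistakes = 2  # errors reported on those wines

unseen_accuracy = accuracy_percent(unseen_total - unseen_mistakes, unseen_total)
print(f"{unseen_accuracy:.1f}%")  # 93.3%
```

In other words, the system classified 28 of the 30 unfamiliar wines correctly, a slightly lower rate than its score on the full dataset.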

NIST physicist Jabez McClelland called it a good sign. “Getting 95.3% tells us that this is working,” says McClelland.

This type of success shows that an array of MTJ devices could be scaled up and used to build new AI systems. “While the amount of energy an AI system uses depends on its components, using MTJs as synapses could drastically reduce its energy use by half if not more, which could enable lower power use in applications such as ‘smart’ clothing, miniature drones, or sensors that process data at the source,” the media release reads.

“It’s likely that significant energy savings over conventional software-based approaches will be realized by implementing large neural networks using this type of array,” explains McClelland.

The study is published in the journal Physical Review Applied.