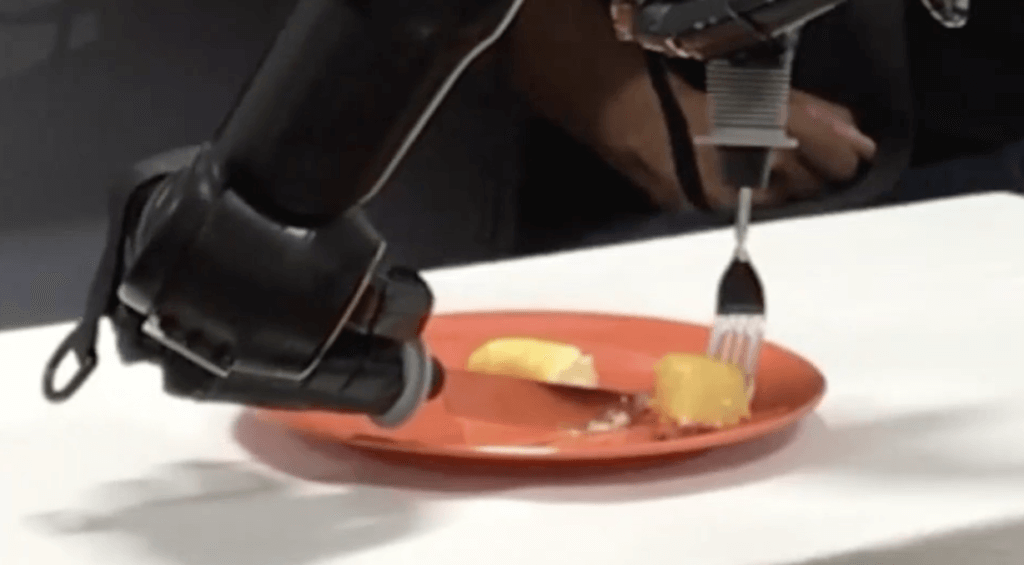

For the first time in 30 years, a partially paralyzed man was able to feed himself, using his mind to control a pair of robotic arms. This brain-machine interface technology comes from researchers at the Johns Hopkins Applied Physics Laboratory (APL) and the Department of Physical Medicine and Rehabilitation in the Johns Hopkins School of Medicine.

According to the researchers, brain-machine interface systems “provide a direct communication link between the brain and a computer, which decodes neural signals and ‘translates’ them to perform various external functions.”

Researchers are combining robot autonomy with limited human input. Dr. David Handelman, the study’s first author and a senior roboticist in the Intelligent Systems Branch of the Research and Exploratory Development Department at APL, says in a statement that the machine does most of the work while enabling the user to customize robot behavior to their liking.

“In order for robots to perform human-like tasks for people with reduced functionality, they will require human-like dexterity. Human-like dexterity requires complex control of a complex robot skeleton,” explains Dr. Handelman. “Our goal is to make it easy for the user to control the few things that matter most for specific tasks.”

In this task, the man ate a piece of cake by making subtle motions with his right and left fists in response to prompts such as “selecting cut location,” which directed the robotic arms to slice off a bite-sized piece of dessert with a fork and knife. He then used another subtle gesture to align the fork with his mouth so he could eat. Throughout the task, a computerized voice announced each action, such as “moving fork to food” and “retracting knife.”

The process took 90 seconds. The researchers say the study shows that, thanks to shared control, a person can maneuver a pair of robotic arms with minimal mental input.

“This shared control approach is intended to leverage the intrinsic capabilities of the brain-machine interface and the robotic system, creating a ‘best of both worlds’ environment where the user can personalize the behavior of a smart prosthesis,” says Dr. Francesco Tenore, the study’s senior author and a senior project manager in APL’s Research and Exploratory Development Department. “Although our results are preliminary, we are excited about giving users with limited capability a true sense of control over increasingly intelligent assistive machines.”

Although the DARPA program behind this work ended in August 2020, researchers at APL and the Johns Hopkins School of Medicine are still collaborating with colleagues at other institutions to demonstrate and explore the potential of the shared control technology.

Beyond the shared control approach, the researchers may integrate earlier work that allowed amputees to control a prosthetic using muscle-movement signals from the brain. Adding sensory feedback delivered directly to a person’s brain could also let users perform some tasks without the constant visual feedback the current experiment requires.

“This research is a great example of this philosophy where we knew we had all the tools to demonstrate this complex bimanual activity of daily living that non-disabled people take for granted,” says Dr. Tenore. “Many challenges still lie ahead, including improved task execution, in terms of both accuracy and timing, and closed-loop control without the constant need for visual feedback.”

The study is published in the journal Frontiers in Neurorobotics.