Childhood stories may have helped us learn to read when we were little, and now they may also help us understand how the brain processes speech. Researchers at the University of Rochester Medical Center are using rhymes from Dr. Seuss's children's books (in particular, *The Lorax*) to map how the brain responds when people listen to and watch a narrator tell a story.

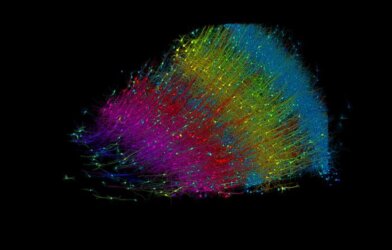

They found that the story activated a network of brain regions involved in sensory processing, multisensory integration, and cognitive functions, some of which may support understanding the story's content. Understanding the role of each brain area could give researchers new ways to explore the causes of certain neurodevelopmental disorders.

“Multisensory integration is an important function of our nervous system as it can substantially enhance our ability to detect and identify objects in our environment,” says lead author Lars Ross, PhD, a research assistant professor of Imaging Sciences and Neuroscience, in a university media release. “A failure of this function may lead to a sensory environment that is perceived as overwhelming and can cause a person to have difficulty adapting to their surroundings, a problem we believe underlies symptoms of some neurodevelopmental disorders such as autism.”

The team used fMRI to record the brain activity of 53 participants while they watched a video of a narrator reading Dr. Seuss's *The Lorax*. The story was presented in one of four randomly assigned conditions: audio only, visual only, synchronized audiovisual, or unsynchronized audiovisual. The team also tracked participants' eye movements as they watched.

As expected, brain regions involved in multisensory integration were active during the storytelling. But the team also found that participants who could see the speaker's facial movements showed greater activity in the broader semantic network and in extralinguistic regions, namely the amygdala and the primary visual cortex, which the researchers note are not usually involved in multisensory integration.

The fMRI scans also picked up activity in the thalamus, a region associated with the early stages at which sensory information from the ears and eyes begins to interact.

“This suggests many regions beyond multisensory integration play a role in how the brain processes complex multisensory speech—including those associated with extralinguistic perceptual and cognitive processing,” explains Dr. Ross.

The team plans to run a version of this experiment with children and has already begun studying brain activity in people with autism spectrum disorder to better understand how audiovisual speech processing develops.

“Our lab is profoundly interested in this network because it goes awry in a number of neurodevelopmental disorders,” adds John Foxe, PhD, senior author of the study. “Now that we have designed this detailed map of the multisensory speech integration network, we can ask much more pointed questions about multisensory speech in neurodevelopmental disorders, like autism and dyslexia, and get at the specific brain circuits that might be impacted.”

The study is published in *NeuroImage*.